The activation function decides whether a neuron should be activated or not.

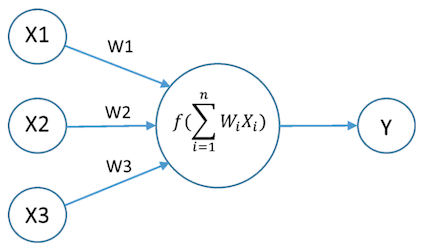

A neuron receives a set of inputs, and each input has its own weight. The total input for that neuron is the sum of every input value multiplied by its weight:

    let totalInput = 0

    inputs.forEach(
      ({value, weight}) => totalInput += value * weight
    )

By the way, this would be a perfect place to use a JavaScript array reducer.
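Here is a minimal sketch of that reducer version. The sample `inputs` array is made up for illustration; only the `{value, weight}` shape comes from the loop above.

```javascript
// Hypothetical sample inputs; each one has a value and a weight.
const inputs = [
  { value: 1, weight: 0.5 },
  { value: 2, weight: -1 },
  { value: 3, weight: 2 },
];

// reduce() carries a running sum (the accumulator), starting from 0.
const totalInput = inputs.reduce(
  (sum, { value, weight }) => sum + value * weight,
  0
);

console.log(totalInput); // 4.5
```

Compared with `forEach`, the reducer needs no mutable variable outside the callback, so `totalInput` can be a `const`.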

After this step, the total input is passed as an argument to the neuron's activation function, and the resulting output is sent to the linked neurons.

    let output = neuron.activationFunction(totalInput)
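To see both steps together, here is a small sketch of one neuron going from inputs to output. The shape of the neuron object, the `activate` helper, and the choice of ReLU as the activation function are all assumptions made for the example.

```javascript
// ReLU: passes positive totals through and clamps negative ones to 0.
const relu = (x) => Math.max(0, x);

// Hypothetical helper: sums value * weight, then applies the activation function.
function activate(neuron) {
  const totalInput = neuron.inputs.reduce(
    (sum, { value, weight }) => sum + value * weight,
    0
  );
  return neuron.activationFunction(totalInput);
}

// Total input is 2 * 0.5 + 1 * -3 = -2, so ReLU outputs 0 (not activated).
const quiet = activate({
  inputs: [{ value: 2, weight: 0.5 }, { value: 1, weight: -3 }],
  activationFunction: relu,
});

// Total input is 2 * 0.5 + 1 * 3 = 4, so ReLU outputs 4 (activated).
const active = activate({
  inputs: [{ value: 2, weight: 0.5 }, { value: 1, weight: 3 }],
  activationFunction: relu,
});

console.log(quiet, active); // 0 4
```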

The output can be one of the following:

- a value between 0 and 1 for binary classification problems, like whether a person is wearing a face mask or not

- probabilities for multiclass classification problems, like a 60% chance that this object is a car

- predicted values, like what the price of a given item will be
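Each kind of output above is commonly paired with a particular activation function on the last layer. The pairing below (sigmoid, softmax, linear) is the usual textbook one and is written in plain JavaScript as a sketch, not as what TensorFlow.js does internally.

```javascript
// Sigmoid squashes any number into the range (0, 1): handy for yes/no questions.
const sigmoid = (x) => 1 / (1 + Math.exp(-x));

// Softmax turns a list of scores into probabilities that add up to 1.
function softmax(scores) {
  const max = Math.max(...scores); // subtracting the max avoids overflow in exp()
  const exps = scores.map((s) => Math.exp(s - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// Linear (identity) leaves the value untouched: used when predicting a quantity.
const linear = (x) => x;

console.log(sigmoid(0));         // 0.5
console.log(softmax([2, 1, 0])); // three probabilities, largest first
console.log(linear(19.99));      // 19.99
```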

Setting the activation function in TensorFlow.js

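We can apply an activation function as a layer of its own like so:

    tf.layers.activation({activation: 'relu6'})

Many other layers, such as tf.layers.dense, also accept an activation option directly in their configuration.

Types of activation functions in TensorFlow.js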

The TensorFlow.js documentation provides the following list of predefined activation functions:

    'elu'|'hardSigmoid'|'linear'|'relu'|'relu6'|'selu'|'sigmoid'
    |'softmax'|'softplus'|'softsign'|'tanh'|'swish'|'mish'

I found these two videos (link 1 and link 2) quite useful as a starting point for explaining the differences between the main activation functions for neural networks.
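To get a feel for a few of the names in that list, here are their standard mathematical definitions written in plain JavaScript. This is only a sketch for single numbers; the TensorFlow.js versions operate on tensors, and the default `alpha = 1` for ELU is an assumption.

```javascript
// Standard definitions, one number in, one number out.
const relu = (x) => Math.max(0, x);                 // negative values become 0
const relu6 = (x) => Math.min(Math.max(0, x), 6);   // like relu, but capped at 6
const elu = (x, alpha = 1) => (x > 0 ? x : alpha * (Math.exp(x) - 1));
const softplus = (x) => Math.log(1 + Math.exp(x));  // a smooth version of relu
const softsign = (x) => x / (1 + Math.abs(x));      // squashes into (-1, 1)
const swish = (x) => x / (1 + Math.exp(-x));        // x * sigmoid(x)
const tanh = Math.tanh;                             // squashes into (-1, 1)

// Compare how each function treats the same inputs.
for (const x of [-2, 0, 3, 10]) {
  console.log(x, relu(x), relu6(x), softsign(x), tanh(x));
}
```

Notice that `relu(10)` stays at 10 while `relu6(10)` is capped at 6, which is the only difference between the two.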

