One of the main tasks at which machine learning excels is recognizing patterns in images. So, for today, our aim is to build a simple Javascript application that can predict which objects appear in a given image.

For this, we will use TensorflowJs with a pre-trained machine learning model and plain old vanilla Javascript, with no frameworks.

In the video below you can see how the final app will work:

This example will focus on identifying just the main object in an image. I've also made an example of how we can use TensorflowJs to detect multiple objects in a picture.

Let's get down to business!

Setting up the starting HTML and Javascript

The boilerplate HTML is pretty straightforward.

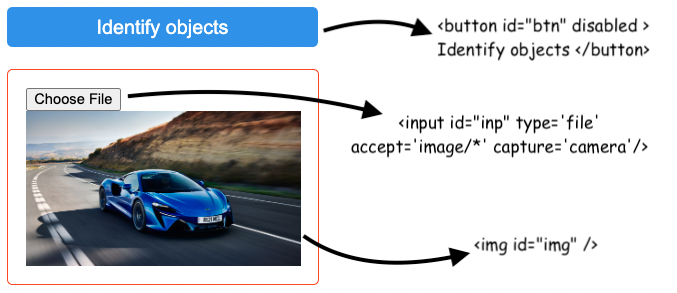

We will need a file input to choose the image file, an actual img element to preview the uploaded image, and a button that will trigger object detection from the TensorflowJs model.

    <button id="btn" disabled>Identify objects</button>
    <div class="container">
      <input id="inp" type='file' accept='image/*' capture='camera'/>
      <img id="img" />
    </div>

The .container div is there just for styling purposes.

Since loading the TensorflowJs image classification model does not block the image upload itself, we can have this functionality ready right from the start.

    const input = document.querySelector('#inp')
    const img = document.querySelector('#img')

    // Preview the chosen file as soon as the user selects it
    input.addEventListener('change', () => {
      const url = window.URL.createObjectURL(input.files[0])
      img.src = url
    })

Only the Identify objects button stays disabled until the model is fully loaded.
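One edge case worth keeping in mind: if the file dialog is dismissed without a selection, `input.files` can be empty, and `createObjectURL` would then receive `undefined`. A minimal sketch of a guard follows, assuming a hypothetical helper named `pickImageFile` that is not part of the original code:

```javascript
// Hypothetical helper (not part of the original app): returns the first
// entry whose MIME type starts with "image/", or null when nothing usable
// was selected. It accepts a FileList or any array-like of { type } objects.
const pickImageFile = (files) => {
  const candidates = Array.from(files ?? [])
  return candidates.find((file) => String(file.type).startsWith('image/')) ?? null
}

// Possible use inside the change handler (browser only):
//   const file = pickImageFile(input.files)
//   if (file) img.src = window.URL.createObjectURL(file)
```

With this in place, the preview would only be updated when an image was actually picked.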

Loading the Mobilenet TensorflowJs model

As in the case of the BERT Question and Answer model, we will use a pre-trained Tensorflow model.

For this example, we will use Mobilenet, a model trained for image classification.

Importing the model is pretty straightforward:

    import * as mobilenet from "https://cdn.skypack.dev/@tensorflow-models/mobilenet@2.1.0";

We will also need to import the actual TensorFlow library in order to use the Mobilenet model.

The next step will be to load the model. When the asynchronous loading of the model is done we can enable the button that will trigger the object identification:

    const button = document.querySelector('#btn')

    const enableUi = (model) => {
      button.disabled = false
      button.addEventListener('click', () => {
        // use the model here
      })
    }

    mobilenet.load().then(enableUi)

Identifying objects in images with TensorflowJs

With all of this in place, the final step will be to use the Mobilenet TensorflowJs model.

The Mobilenet model has an asynchronous classify() method that takes the image as input and returns a promise resolving to an array of the identified objects, each with a score for how sure the model is about that identification.

The result will contain an array of Javascript objects similar to the one below:

    [{
      className: "Egyptian cat",
      probability: 0.8380282521247864
    }, {
      className: "tabby, tabby cat",
      probability: 0.04644153267145157
    }, {
      className: "Siamese cat, Siamese",
      probability: 0.024488523602485657
    }]

So all that is left is to fill in the click handler inside enableUi, something like this:

    button.addEventListener('click', () => {
      model.classify(img).then(
        e => alert(JSON.stringify(e))
      )
    })

And that's it! You can check out the full code in this codepen, and if you are interested in seeing how to train a TensorflowJs model from scratch, you can check out this tutorial I've written.
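As an optional refinement, the raw JSON shown by the alert can be turned into something easier to read. The sketch below assumes a hypothetical helper, `formatPredictions`, with an optional `minProbability` cutoff; neither comes from the original code nor from the Mobilenet API, and the sample data is simply the result array shown earlier in this post.

```javascript
// Hypothetical helper (an illustration, not part of the Mobilenet API):
// turns classify() results into one readable line per prediction and
// drops entries whose probability is below minProbability.
const formatPredictions = (predictions, minProbability = 0) =>
  predictions
    .filter(({ probability }) => probability >= minProbability)
    .map(({ className, probability }) =>
      `${className}: ${(probability * 100).toFixed(1)}%`)
    .join('\n')

// Sample data copied from the result array shown earlier in this post.
const sample = [
  { className: 'Egyptian cat', probability: 0.8380282521247864 },
  { className: 'tabby, tabby cat', probability: 0.04644153267145157 },
  { className: 'Siamese cat, Siamese', probability: 0.024488523602485657 },
]

console.log(formatPredictions(sample, 0.03))
// Egyptian cat: 83.8%
// tabby, tabby cat: 4.6%
```

In the click handler, this could stand in for the JSON.stringify call, for example `model.classify(img).then(e => alert(formatPredictions(e)))`.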

📖 50 Javascript, React and NextJs Projects

Learn by doing with this FREE ebook! Not sure what to build? Dive in with 50 projects with project briefs and wireframes! Choose from 8 project categories and get started right away.
